Two great reads on history, memory, and fun

History, by Frederick Dielman, in the Library of Congress Thomas Jefferson Room

I don’t know about y’all, but I occasionally need a reminder of why I do what I do. This is especially the case at the end of a long and busy semester. Here are two pieces on the purpose and value of history that gave me precisely the reminders I needed, and which I want to endorse and recommend:

“The Claims of Memory,” by Wilfred M McClay

Wilfred McClay’s is the longer and more philosophical and meditative of these two pieces, and was originally delivered as First Things magazine’s 34th Erasmus Lecture this October.

History, for McClay, is not simply a list of dates to memorize (the high school football coach method) or a jumbled rush of unrelated discrete events (“one damned thing after another”), but a form of memory that transcends and enlarges individual human memory, an attitude dating back at least to Cicero, who wrote that “Not to know what happened before you were born is to be a child forever.” (I have this line on my office door.) McClay agrees, and develops the metaphor further, arguing that memory is essential to maturation and that its loss is fatally debilitating not only to the individual but to society. He invokes Alzheimer’s as an example of how the loss of one person’s memory can affect multitudes. Recall the concept of transactive memory I wrote about earlier this year.

But memory is also tricky, subject not only to ageing and degradation but to vagueness and distortion, and simply amassing more and more empirically determined data may only make that worse. Drawing on another example of memory gone wrong, a Russian psychological patient who could recall literally everything he had ever seen but could not organize those things into a coherent, generalized understanding (what would probably happen to a real Will Hunting), McClay also makes room for the necessity of forgetting. “What makes for intelligent and insightful memory,” he argues, “is not the capacity for massive retention, but a balance in the economy of remembering and forgetting.”

But, McClay notes, “there are crucial differences” between individual memory, even in senility, and history as a profession and a cultural tradition:

No one can be blamed for contracting Alzheimer’s disease, an organic condition whose causes we do not fully understand. But the American people can be blamed if we abandon the requirement to know our own past, and if we fail to pass on that knowledge to the rising generations. We will be responsible for our own decline. And our society has come dangerously close to this very state. Small wonder so many young Americans now arrive at adulthood without a sense of membership in a society whose story is one of the greatest enterprises in human history. That this should be so is a tragedy. It is also a crime, the squandering of a rightful inheritance.

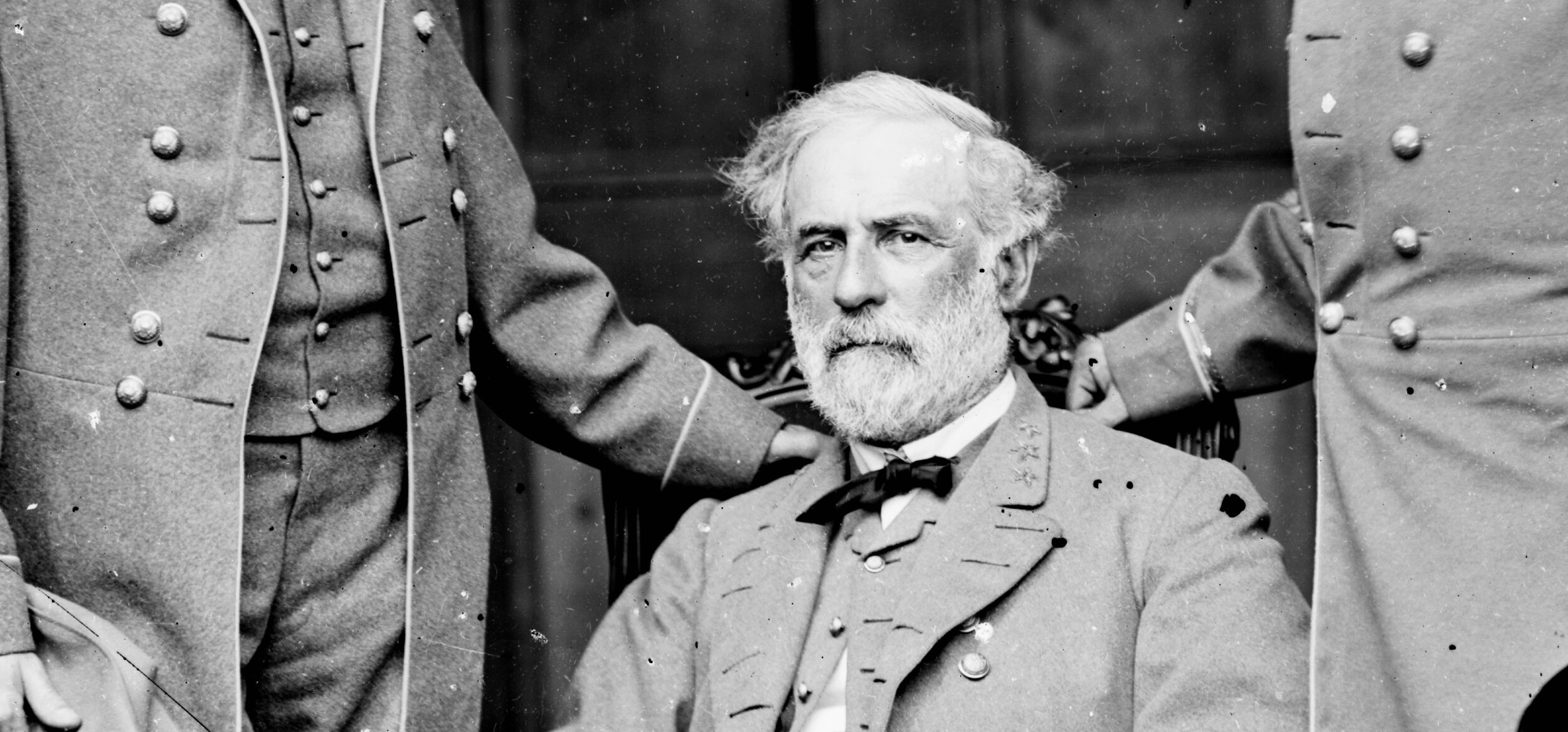

This squandering “goes far beyond bad schooling and an unhealthy popular culture” to a censorious, “imperious” and “ever-grinding machine of destruction and reconstruction” that “makes it difficult to commemorate anything that is more than a few years old.”

The whole proposition of memorializing past events and persons, particularly those whose lives and deeds are entwined with the nation-state, has been called into question by the prevailing ethos, which cares nothing for the authority of the past and frowns on anything that smacks of hero worship or filial piety.

Invoking pietas is really speaking my language. McClay is here describing what Roger Scruton called a “culture of repudiation,” and McClay offers incisive critiques of the way academic faithlessness toward the duty to preserve memory translates into popular indifference or, for the “woke,” outright hostility toward the past.

[W]e are rendering ourselves unable to enjoy such things, unless some moral criterion is first met. That inability arises, I fear, from guilt-haunted hearts that are unable to forgive themselves for the sin of being human and cannot bear their guilt except by projecting it onto others. The rest of us should firmly refuse that projection and recognize this post-Christian tyranny for what it is.

This is an excellent, wide-ranging, and thoughtful examination of a problem I care a lot about, and I hope you’ll read it. You can find the whole piece here. A recording of the lecture is also available on Vimeo, though I haven’t been able to play it on my machine. I hope y’all will have better luck.

McClay wrote Land of Hope, a one-volume narrative history of the United States that was one of my favorite books of 2020—a year when our need for appreciative but not uncritical memory became especially apparent. I quoted a longish excerpt in which McClay makes the case for narrative history here.

“Make History Great Again!” by Dominic Sandbrook

British historian Dominic Sandbrook’s piece is the shorter and punchier of the two, and begins with a question near to my heart: “Why don’t today’s children know more about history?”

I’ve cared about this topic for a long time—first as I figured out how I came to love history as a kid, then as I figured out how to get my students to love history, and now, even more pressingly, as I figure out how to pass on my love of history and even some of my own history to my own children. It’s an important question for all the reasons McClay lays out in his piece.

Sandbrook suggests that the problem is that history, roped off and quarantined lest anyone catch cooties from old ideas we don’t approve of, has been made uninteresting to children—not only in terms of content, presenting “issues” and “forces” rather than events and personalities, but because of the tone with which this history is presented:

In recent years, the culture around our history has been almost entirely negative. Statues are toppled, museums ‘decolonised’, heroes ‘re-contextualised’, entire generations of writers and readers dismissed as reactionaries. When Britain’s past appears in the national conversation, it’s almost always in the context of controversy, apology and blame. . . .

Against this background, who’d choose to study history? For that matter, who’d be a history teacher? Even selecting a topic for your Year 4 children seems full of danger, with monomaniacal zealots poised to denounce you for reactionary deviation. And all the time you’re bombarded with ‘advice’, often in the most strident and intolerant terms.

This presentation is “at once priggish, hand-wringing and hectoring, forever painting our history as a subject of shame.” It also oversimplifies, and the oversimplified is always deadly dull. Complexity excites, especially once a student is immersed enough in a particular time and place to get the thrill of piecing seemingly disparate parts of a narrative together. But that requires imagination, which rebels at dullness.

Part of this dull simplicity is the prevalence of one permissible narrative, a vision or set of emphases to which it is morally imperative that all others be subordinated. Sandbrook invokes the manner in which the UK’s National Trust suggests educators use England’s stately old country houses to cudgel unsuspecting students:

The National Trust’s much-criticised dossier about its country houses’ colonial connections opens by talking of the ‘sometimes uncomfortable role that Britain, and Britons, have played in global history’, and piously warns the reader that our history is ‘difficult to read and to consider’. The Trust’s Colonial Countryside Project encourages creative writing about ‘the trauma that underlies’ many country houses. In other words, drag the kids around an old property and make them feel miserable. Maybe I’m wrong, but I doubt that’ll make historians of them.

He’s not wrong. Admiring the house and its long-lasting beauty and imagining yourself living there (the natural impulses of a healthy child in an excitingly, concretely alien place) would seem to invite punishment.

Sandbrook examines as well the way American-centric concerns have taken over even the British imagination, all in the name of giving children something actionable and, well—a word I’ve inveighed against here before: “Behind this lurks the spectre of ‘relevance’, a word history teachers ought to treat with undiluted contempt.” Hear hear!

History isn’t about you; that’s what makes it history. It’s about somebody else, living in an entirely different moral and intellectual world. It’s a drama in which you’re not present, reminding you of your own tiny, humble place in the cosmic order. It’s not relevant. That’s why it’s so important.

As much as all of the above had me pounding my desk in approval, it is all preparatory to Sandbrook’s positive recommendations on how to make children interested in history again: story, setting, and people—the narrative elements we are wired to respond to, to build our lives around and to emulate, all of which begins in the molding of the affections and the imagination. And that begins in childhood:

So how should we write history for children? The answer strikes me as blindingly obvious. As a youngster I was riveted by stories of knights and castles, gods and pirates. What got me turning the pages wasn’t the promise of an ‘uncomfortable’ conversation. It was the prospect of a good story. Alexander the Great crossing the Afghan mountains, Anne Boleyn pleading for her life on the way to the scaffold, Britain’s boys on the beaches of Dunkirk, Archduke Franz Ferdinand taking the wrong turn at the worst possible moment... that’s more like it, surely?

Add to all of this “an attitude,” specifically that of an open-minded traveler visiting alien lands—about which more below—with the first and most obvious benefit of travel as his goal:

Exploring that vast, impossibly rich country ought to be one of the most exciting intellectual adventures in any boy or girl’s lifetime—not an exercise in self-righteous mortification. Put simply, it should be fun.

Three cheers.

I’ve quoted extensively from this piece because it’s so good, but there’s more, and Sandbrook’s recommendations at the end are excellently put. Read it for those at least. You can read the whole piece at the Spectator here.

Sandbrook has published several volumes of history both for adults and children. I’m sorry to say I haven’t read them, though I’m awaiting the arrival of his children’s Adventures in Time volume on World War II. He is also—with the great Tom Holland, whose Dominion was my other favorite historical work of 2020—a host of the podcast The Rest is History.

Conclusion

Something that struck me in these pieces is that, at one point, both invoke LP Hartley’s celebrated line that “The past is a foreign country.” I use that line, as well as the one on memory and maturity by Cicero above, to open every course I teach, every semester. I find it gets my approach across pretty well and primes the students for our study of the past to amount to more than names and dates.

Nevertheless, CS Lewis wrote in The Abolition of Man, a book especially concerned with the hollowing out of the purportedly educated, that “The task of the modern educator is not to cut down jungles but to irrigate deserts.” It’s true. But the desert is so dry and the digging so relentless that I end most semesters not just weary but exhausted. Spent. Nearly despairing. This semester was no exception.

So I’m grateful to McClay and Sandbrook for breathing some life back into me and reminding me not only of what’s at stake, but how much fun real, good history can be—and should be.